In the last several weeks, I have been experimenting with solving the ARC‑AGI‑2 benchmark using multi‑agent systems built with LangGraph. It is a fascinating and very challenging benchmark that tests core reasoning and abstraction skills. Humans excel at it whereas current frontier models fail.

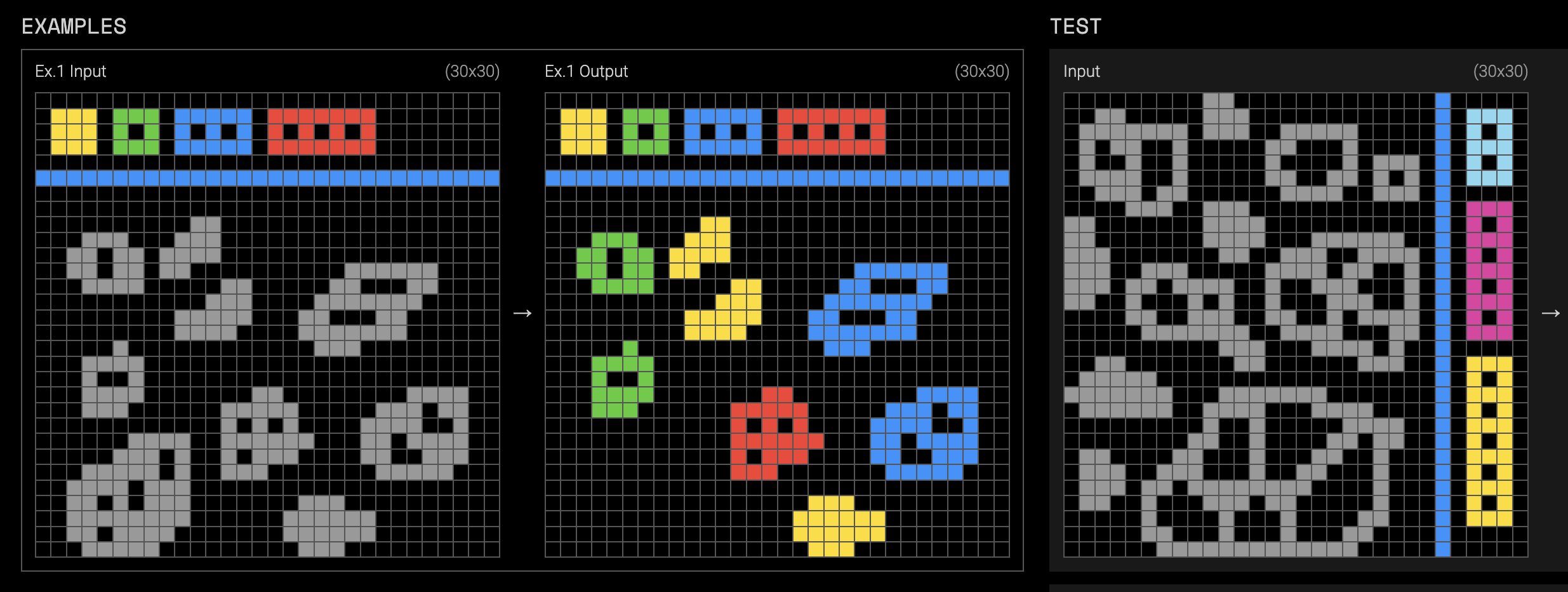

ARC‑AGI‑2 is a new version of François Chollet’s Abstraction and Reasoning Corpus. It’s a benchmark of grid‑based puzzles where each task consists of small colored grids, and you have to infer a transformation rule from examples and then apply it to new inputs.

Consider the task below. Can you figure out the rule and produce the correct output for the test input? You can try all the samples at the official ARC playground, here is the link to below task.

Each task consists of the following components:

- Several train grids: input → output examples that demonstrate the underlying transformation.

- One or several test grids: input only. You must produce the output grid.

There are multiple datasets:

- Training set: 1,000 public tasks, uncalibrated, intended for training models.

- Public eval set: 120 public tasks, calibrated, same difficulty distribution as the hidden eval sets.

- Semi‑private and private eval sets: 120 tasks each, hidden, used for Kaggle and final leaderboard scoring.

I’m not using the training set at all in my experiments. Instead, I treat ARC‑AGI‑2 as a pure test‑time reasoning problem: you get a new eval task, and you must figure it out “on the fly,” like a human would.

For the public training and public eval sets, we do actually have the expected outputs for the test samples too, so we can measure success offline. For the semi‑private and private sets, those outputs are hidden and only used by the competition infrastructure.

In all my experiments:

- I ignore the 1,000‑task training set entirely.

- I run my systems directly on the public eval set and evaluate pass/fail by comparing generated test outputs with the provided ground truth.

- I never tailor my approach to specific tasks; each task is “new” to the system.

- Everything is implemented as LangGraph multi‑agent systems.

- I use GPT‑5 with medium reasoning as the underlying LLM for all agents.

Attempt 1: Code‑Generating Multi‑Agent System

In the first attempt, I treated each task as a programming problem:

- Instruction agent

- Reads the task’s train inputs/outputs.

- Gets some structured metadata (like cluster summaries).

- Produces a natural‑language description of the transformation.

- Code agent

- Reads the instruction text.

- Writes Python code that takes an input grid and returns the transformed output grid.

- Verifier node

- Runs the generated code on every train input.

- Compares the code’s outputs to the expected train outputs.

- If they all match, applies the code to test inputs and returns the generated test outputs.

- If they don’t match, it feeds back the error details to the instruction agent for another round of refinement.

So the loop is:

Results:

- On the public training split: >50% of tasks solved (i.e., generated test outputs match expected outputs). Based on around 50 tasks tried.

- On the public eval split: around 6% success (based on ~40 tasks tried, not the full 120, due to cost).

My interpretation is that the eval tasks contain more intricate, compositional rules that require long, careful programs. Even when the code works on simpler train tasks, it tends to break down on the harder eval tasks. The “generate full program” step seems to be the bottleneck.

Attempt 2: Step‑By‑Step Instruction Execution (No Code)

In the second attempt, I removed code generation completely. Instead, I tried to have the model directly generate output grids, but in a step‑by‑step manner.

- The instruction agent produces one step of a transformation at a time.

- The generation agent applies that single step to the current grids.

So the loop might look like this:

- Instruction agent: “Step 1: Move each cluster of blue cells to the right edge, preserving shape.”

- Generation agent: applies that step to the working grid and outputs the intermediate result.

- Instruction agent: sees the intermediate result (and the ground‑truth train outputs) and generates “Step 2: Recolor each moved cluster according to this rule…”

- Generation agent: applies step 2, and so on.

Thus, instead of asking the LLM to apply a complex, multi‑step transformation in one shot, we:

- Have it decompose the task into simpler steps, and

- Execute these steps sequentially, with feedback after each.

- This is similar in spirit to the state‑of‑the‑art approach by J. Berman, but with a key distinction: in my approach, the instruction agent always operates one step at a time and the gen agent always applies that single step, not the entire transformation at once.

My idea was that breaking down the task and executing incrementally would help the model maintain a clearer internal representation of the transformation, reducing errors from complexity overload.

Results:

- Public training split: again >50% success.

- Public eval split: about 10% success (again, approximate, based on ~50 tasks).

So the step‑by‑step execution improved eval performance relative to Attempt 1, but not by a huge margin.

Attempt 3: Iterative Self‑Critique Over Train, Then Test

In the third attempt, I tried to better leverage the train examples before touching the test grids. I also moved away from stepwise execution back to full transformations, but added an iterative refinement loop.

The system still has:

- An instruction agent (full instructions, not just one step).

- A generation agent (produces full output grids).

The process:

Train samples phase

- Instruction agent generates a complete set of instructions for the transformation.

- Generation agent applies those instructions to the train inputs to produce candidate train outputs.

- We compare generated train outputs to expected train outputs.

- If they don’t match, we:

- Compute pixel‑wise differences.

- Feed those differences, plus the failure info, back to the instruction agent.

- The agent revises its instructions; generation is re‑run.

- We keep iterating until we get a perfect match on all train samples (or we hit a cost/time limit).

Test sample phase

- Once we have “successful” instructions on train, we copy those instructions and apply them to test inputs.

- The instruction agent then inspects the generated test outputs and decides:

- “These look correct,” or

- “They need more work,” in which case it refines the instructions again and we regenerate.

- We keep the latest best instructions as we iterate.

In short: use the train examples as a sandbox to converge to a robust description of the transformation, then adapt that description while inspecting the test outputs.

Results

Public training split: again >50%. Public eval split: around 16% success based on partial run. An improvement over Attempt 2, but still far from human levels.

The iterative nature helps with some tasks, but my sense is that once transformations cross a certain complexity threshold, the LLM’s internal representation just isn’t stable enough: it “forgets” corner cases, or overfits to particular train layouts and fails to generalize.

Extra Signals I Tried (And Why They Only Half‑Help)

Across all three attempts, I tried to give the agents more structure and feedback:

Cluster summaries

- For each grid, I compute connected components (“clusters”) and provide:

- Their positions, shapes, colors, counts, etc.

This helps a lot on tasks where the transformation is clearly cluster‑based (e.g., “move each red cluster to the top row”). But many ARC‑AGI‑2 tasks aren’t cleanly cluster‑based, so this only partially helps, and potentially biases the model away from correct solutions in non-cluster type tasks.

Pixel‑wise difference maps

- After generating an output, I compute per‑cell diffs vs. the expected output.

- I feed these colored diff‑grids back to the instruction agent.

- Intuitively, this should highlight “where things went wrong,” but in practice:

- The diffs are low‑level, and

- The agent still has to interpret them in terms of higher‑level rules.

- My impression: it’s hard for the model to jump from “these 12 pixels are wrong” to “I mis‑specified the rule about extending patterns along diagonals.”

I used GPT‑5 with medium reasoning in all attempts. The models can reason fairly well about local patterns and short chains of logic, but ARC‑AGI‑2 eval tasks demand compositional, global, and often brittle reasoning. Even with better signals, the bottleneck remains the model’s ability to form a crisp internal hypothesis and stick to it.

Open vs. Kaggle Competition Constraints

All of the above was targeting the open ARC‑AGI‑2 setting, where:

- Internet is allowed.

- Commercial models (like GPT‑5) are allowed.

- Compute and wall‑clock are only constrained by cost and practicality.

There’s also the official Kaggle competition, with different rules:

- No internet access.

- Only open‑source models (you can download them to use in your solution).

- Limited compute: 96 GB GPU memory, 12‑hour time limit.

For Kaggle, I started adapting Attempt 3 into a notebook:

- Replaced GPT‑5 with Qwen3‑VL‑8B thinking model.

- Kept the instruction + generation agent structure.

I ran into two practical issues:

- Qwen3‑VL‑8B likes to think for quite a while, but this is slow at deep reasoning chains, especially when you have multiple agents and many iterations per task.

- I only heard about the competition a short time before it ended in November 2025, so I had limited time to iterate on approach, models and infrastructure. I was exploring alternative open‑source models, but ran out of time before I could systematically benchmark them under Kaggle constraints. So I have no official Kaggle entry. For what it’s worth, here is the Kaggle notebook I was working on.

Why I Don’t Use the Training Set (And What That Suggests)

A lot of ARC‑AGI work focuses on training models on the 1,000 training tasks or on augmented versions of them. For example the prior state of the art approach by E. Pang generates a library of useful transformation programs by iterating over training dataset first. I’ve taken a different stance so far:

I deliberately don’t use the training set at all.

Reason: as a human, I don’t need to study 1,000 ARC tasks to be able to solve a new one. I can pick up a new eval or test task and reason it through directly. If our AI models or systems had robust, general reasoning, they should be able to do the same.

So my working hypothesis is: If a system cannot achieve good performance by pure test‑time reasoning on the eval tasks, then the limitation is primarily in the reasoning ability of the underlying model and no amount of pre‑training on the training set will fundamentally fix that—at best it will help the model memorize a richer library of patterns and heuristics.

Training‑based approaches do get higher scores than pure LLM prompting today, and they are absolutely valuable. But I’m skeptical that you can “data‑augment your way” out of a deep reasoning deficit. My interest is in approaches that:

- Either develops, or uses an available strong general reasoner

- Then builds tooling around it (like multi‑agent systems, verification loops, explicit representations) to harness that reasoning.

Repos & Future Work

The code for these experiments is public:

Attempts 2 and 3 (direct grid generation, stepwise + iterative)

I only evaluated on partial public eval tasks per attempt, so the exact success rates (6%, ~10%) are approximate, but the qualitative picture is clear:

- Training split: LLM‑centric approaches can clear >50% of tasks with some engineering.

- Eval split: a sharp drop to single‑digit / low double‑digit performance. These are genuinely hard reasoning tasks that current LLMs struggle with.

My plan:

- Continue iterating on the open setting and aim to beat the current public state of the art on the open leaderboard.

- Explore richer internal representations (graph‑like, program‑like) that the agents can manipulate explicitly.

- Prepare for ARC‑AGI‑3, which is slated to introduce interactive elements.

I’ll post follow‑ups as I try new agent designs and models—and if you’re experimenting with ARC‑AGI benchmark yourself, I’d be happy to collaborate or discuss ideas!

Leave a Comment

Your email address will not be published. Required fields are marked *